Table formats like Iceberg, Hudi, and Delta are receiving a lot of attention today. They have introduced a significant amount of convenience and efficiency to big data processing. However, to fully understand and appreciate table formats and their role in the big data ecosystem, we need to go back in time.

1. Hadoop MapReduce

The Hadoop MapReduce framework marked the beginning of the large-scale data processing era in open source. It was based on two of Google's papers, one describing the Google File System (GFS) and the other the MapReduce computation paradigm, and was open-sourced at Yahoo in 2006. Adoption grew in areas that required processing large amounts of data on commodity hardware, with advertising being one such area.

The technology wasn’t particularly easy to use or highly efficient. Despite that, it was revolutionary in what it enabled: processing datasets far too large for a single machine, on clusters of ordinary servers.

As for table formats, Hadoop had no tables as such. It worked on files stored in HDFS, its distributed file system. A job took a directory of files as input and produced another directory of files as output. It wasn’t until the advent of Hive that tables came into use.

2. Hive

Inspired by the success of the general-purpose big data computation capabilities brought by Hadoop, the next frontier was adding SQL support and enabling more user-friendly analytical usage.

Hive was created at Facebook (now Meta) and open-sourced in 2008, becoming a top-level Apache project in 2010. This event was no less revolutionary than the creation of Hadoop. Hive and its ecosystem made it possible for non-engineers to query large amounts of data using SQL. It marked the beginning of analytical big data processing, which ultimately led to the creation of the Data Engineering and Data Analytics fields as we know them today.

Behind the scenes, Hive relied on the MapReduce framework. It would take a query, analyze it, and create a plan consisting of a series of MapReduce jobs on Hadoop. Hive then executed these jobs in the planned order, without exposing any of these details to the user.

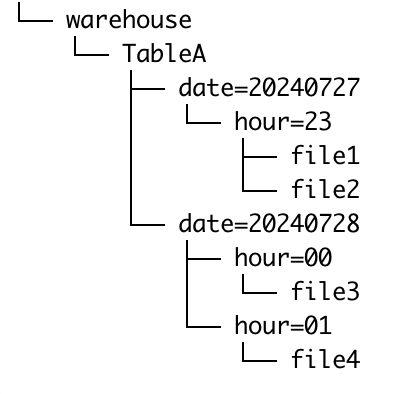
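To make the translation from SQL to MapReduce more concrete, here is a minimal sketch of how a simple `GROUP BY` count can be expressed as a map phase, a shuffle, and a reduce phase. It is a single-process toy, not Hive's actual planner; the `ROWS` data and all function names are made up for illustration.

```python
from collections import defaultdict

# Hypothetical input: (country, page) click records standing in for table rows.
ROWS = [("US", "/home"), ("DE", "/home"), ("US", "/cart"), ("US", "/home")]


def map_phase(rows):
    """Emit a (key, 1) pair per row, keyed by the GROUP BY column."""
    for country, _page in rows:
        yield country, 1


def shuffle(pairs):
    """Group values by key, as the framework does between map and reduce."""
    grouped = defaultdict(list)
    for key, value in pairs:
        grouped[key].append(value)
    return grouped


def reduce_phase(grouped):
    """Aggregate each key's values; here the aggregate is COUNT(*)."""
    return {key: sum(values) for key, values in grouped.items()}


# Roughly equivalent to: SELECT country, COUNT(*) FROM clicks GROUP BY country
result = reduce_phase(shuffle(map_phase(ROWS)))
print(result)
```

A query with joins or nested aggregations would need several such stages chained together, each reading the files written by the previous one, which helps explain why Hive queries could be slow.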

Hive introduced a number of new concepts. First, data was now organized into tables. Internally, each table mapped to a directory in HDFS, but users no longer needed to interact with the raw filesystem. To reduce the data required for a table scan and simplify data loading, tables were manually split into partitions. Each partition contained a portion of the data based on fixed criteria, usually all data for the same date or for the same date and hour. These were known as daily and hourly partitioned tables. We’ll return to this layout later when discussing data ingestion.
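As a rough illustration of this layout, the sketch below maps a date and hour to a partition directory using Hive's `key=value` directory naming, and shows how a date filter narrows a scan to a subset of directories. The table root and the partition column names `dt` and `hour` are assumptions chosen for the example.

```python
from datetime import date, timedelta

TABLE_ROOT = "/warehouse/events"  # hypothetical table directory


def partition_path(day: date, hour: int) -> str:
    """Directory holding all rows for one date and hour."""
    return f"{TABLE_ROOT}/dt={day.isoformat()}/hour={hour:02d}"


def partitions_to_scan(start: date, end: date) -> list:
    """Return the hourly partition directories for days in [start, end]."""
    days = (end - start).days + 1
    return [
        partition_path(start + timedelta(days=offset), hour)
        for offset in range(days)
        for hour in range(24)
    ]


print(partition_path(date(2024, 1, 5), 7))
print(len(partitions_to_scan(date(2024, 1, 1), date(2024, 1, 2))))
```

A query filtering on two days only has to read those 48 directories, regardless of how many years of data the table holds.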

Second, to maintain the mapping from a table to a directory in a filesystem, along with table metadata such as name and column definitions, Hive introduced the Hive Metastore service. To this day, you might be using a Hive Metastore-compatible service, such as the AWS Glue Data Catalog, to manage tables.

Finally, around this time, file formats began to receive more attention. This is when columnar formats like ORC and Parquet were created and started gaining popularity. Previously, MapReduce used simple row-based formats such as line-by-line text records, SequenceFile, or even CSV files. These formats fit well into the row-by-row processing model of MapReduce and its file-based operations. However, analytical workloads benefit significantly from a columnar layout, including better compression. The Hive engine could now create these columnar files automatically, removing the need to manually read and write them and paving the way for increasing storage format complexity.
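The difference between the two layouts can be sketched in a few lines. This is only a conceptual illustration using in-memory Python structures, not how ORC or Parquet are actually encoded; the sample records and the simple run-length encoding are assumptions made for the example.

```python
from itertools import groupby

# Row-based layout: each record is stored as a whole.
rows = [
    {"user": "ann", "country": "US", "amount": 10},
    {"user": "bob", "country": "US", "amount": 20},
    {"user": "eve", "country": "DE", "amount": 5},
]

# Columnar layout: all values of one column are stored together.
columns = {name: [row[name] for row in rows] for name in rows[0]}

# An aggregate over one column has to walk full records in the row layout...
total_from_rows = sum(row["amount"] for row in rows)
# ...but only needs a single column in the columnar layout.
total_from_column = sum(columns["amount"])


def run_length_encode(values):
    """Collapse runs of equal adjacent values into (value, count) pairs."""
    return [(value, len(list(group))) for value, group in groupby(values)]


# Similar values sit next to each other in a column, which helps compression.
encoded_country = run_length_encode(columns["country"])
print(total_from_column, encoded_country)
```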

It seemed like Hive was a dream come true for analysts and data engineers. What more could you wish for? Unfortunately, there were a number of limitations. For one, Hive SQL queries could take a long time, sometimes hours or even days. This was partly due to its reliance on Hadoop MapReduce, which was not the most efficient method for executing analytical queries. Additionally, there were very limited opportunities to prune the data to be scanned, often requiring the processing of entire tables and partitions. Finally, in Hive, data could only be efficiently ingested by overwriting whole partitions, such as hourly or daily partitions. However, some of these issues began to change with the advent of dedicated analytical engines.

3. Presto, Impala, Spark

Presto is an open-source big data SQL query engine, known for its speed with analytical ad-hoc queries. Presto was created and open-sourced by Facebook (now Meta) in 2013. Impala was a similar effort from Cloudera. Around the same time, Spark moved to the Apache Software Foundation and began gaining major adoption.

Both Presto and Impala were inspired by Google’s Dremel paper, which described interactive analysis on large amounts of data. These systems provided better efficiency and speed, making it possible to query data in Hive tables faster and get query results in minutes, not hours.

Spark brought its share of improvements, contributing to the departure from Hadoop. Its in-memory processing and more developer-friendly APIs made it a widely used big data processing platform, eclipsing Hadoop.

At this point, only HDFS storage from Hadoop remained in use. Its MapReduce compute framework quickly became obsolete, while Parquet began to emerge as a common columnar file format for analytics.

With analytical processing becoming faster and more efficient, addressing one of Hive’s major downsides, attention turned to another key issue: ingestion.

4. Iceberg, Hudi, Delta

As mentioned above, the layout of data in Hive wasn’t particularly flexible. In most cases, tables were partitioned on one or more levels, such as by date and hour, and each partition mapped directly to a directory in HDFS. This layout also had implications for data ingestion and the operations that could be supported.

In particular, the most efficient, safe, and widely adopted method for ingesting data into a Hive table was to use "insert overwrite" into a partition. This approach would create or replace the entire partition at once. Consequently, if a table was partitioned hourly, data for a given hour would only appear in it after that hour had ended and its partition had been written.

Furthermore, to delete or update a row, you would need to follow the same approach: rewrite all data in the given partition and "insert overwrite" it back in place of the previous data.
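The cost of this approach is easy to see in a small sketch. Here a table is modeled as a dictionary from partition name to a list of rows, which is purely an assumption for illustration; `insert_overwrite` and `delete_row` are hypothetical helpers, not Hive APIs.

```python
def insert_overwrite(table, partition, rows):
    """Create or replace the whole partition in one step."""
    table[partition] = list(rows)


def delete_row(table, partition, row_id):
    """Delete one row by rewriting every remaining row of its partition."""
    kept = [row for row in table[partition] if row["id"] != row_id]
    insert_overwrite(table, partition, kept)
    return len(kept)  # number of rows that had to be rewritten


# Hypothetical hourly partitioned table.
events = {}
insert_overwrite(events, "dt=2024-01-05/hour=07", [{"id": 1}, {"id": 2}, {"id": 3}])
insert_overwrite(events, "dt=2024-01-05/hour=08", [{"id": 4}])

rewritten = delete_row(events, "dt=2024-01-05/hour=07", 2)
print(rewritten, events["dt=2024-01-05/hour=07"])
```

Removing a single row forces the other rows of that partition to be written again, while unrelated partitions stay untouched. With partitions holding millions of rows, this becomes expensive quickly.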

This is where Iceberg, Hudi, and Delta came into play. Originally created at Netflix and open-sourced in 2018, Iceberg introduced a new paradigm of table data organization, now known as Table Format. Essentially, it added an additional layer between the raw data files in HDFS/S3 and the table definition in the Hive Metastore. With Iceberg, and similarly with other table formats, tables no longer needed to be split into pre-defined partitions. Iceberg allowed for atomic ingestion of batches of data into a table at any time. "Atomic" here is the key: it guaranteed that the table state remained consistent, preventing issues such as record duplication or data loss.

Internally, Iceberg introduced the concept of a manifest, which logically lists all files belonging to a table. At a high level, when data files need to be added or removed, it creates a new instance of the manifest that includes the updated set of files and then switches the table pointer to this updated manifest. As an additional benefit, queries that are still running on the previous set of files will continue without failing, as no files are physically deleted at this point. Periodic maintenance, another new concept introduced by Table Formats, cleans up old snapshots, metadata files, and data files that are no longer referenced, and compacts small data files to optimize both query performance and storage.
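The pointer swap described above can be sketched as follows. This is a deliberately simplified model, assuming a single immutable `Manifest` per table version; the real Iceberg metadata is layered and more involved, and the class and method names here are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Manifest:
    """Immutable set of the data files that make up one table version."""
    files: frozenset


class Table:
    def __init__(self):
        self.current = Manifest(frozenset())  # the "table pointer"

    def commit(self, add=(), remove=()):
        # Build a new manifest; the previous one is never modified.
        new_files = (self.current.files | frozenset(add)) - frozenset(remove)
        # Readers see either the old version or the new one, never a mix.
        self.current = Manifest(new_files)
        return self.current


table = Table()
table.commit(add=["a.parquet", "b.parquet"])

reader_view = table.current  # a query that started at this version
table.commit(add=["c.parquet"], remove=["a.parquet"])

print(sorted(reader_view.files))    # the running query's view is unchanged
print(sorted(table.current.files))  # new queries see the new version
```

Because `a.parquet` is only dropped from the new manifest and not deleted from storage, the running query can still read it. Physically removing it is left to a later cleanup step.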

The details and capabilities of Table Formats deserve their own write-up, but the main takeaway here is that Table Formats made it much more efficient to add data to tables, significantly improving the ingestion process.

5. Table Catalogs: the next frontier?

After addressing the problem of table layout, we turn our attention to the infrastructure needed to support it. Table Formats can usually use a file system for metadata, such as manifests, and do not necessarily require a catalog service. However, as adoption grew, it became clear that certain operations are more convenient or even possible only with a catalog service.

Beyond storing table metadata, one such example is lock support. Atomic file modifications are crucial for Table Format operations. However, with parallel writers, supporting such updates becomes challenging and can lead to failed or retried changes. A catalog service enables more sophisticated locking schemes to address this issue.
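One common way to handle parallel writers is optimistic concurrency: a writer prepares its change, then asks the catalog to swap the table pointer only if nobody else has changed it in the meantime, and retries otherwise. The sketch below assumes an in-memory `Catalog` class with a `compare_and_swap` method; both are hypothetical and do not correspond to the API of any specific catalog.

```python
import threading


class Catalog:
    """Hypothetical catalog holding one metadata pointer per table."""

    def __init__(self):
        self._lock = threading.Lock()
        self._pointers = {}

    def get(self, table):
        return self._pointers.get(table)

    def compare_and_swap(self, table, expected, new):
        """Set the pointer to `new` only if it still equals `expected`."""
        with self._lock:
            if self._pointers.get(table) != expected:
                return False  # another writer committed first
            self._pointers[table] = new
            return True


def commit_with_retry(catalog, table, make_new_pointer, max_attempts=5):
    """Re-read the pointer and retry until the swap succeeds."""
    for attempt in range(1, max_attempts + 1):
        base = catalog.get(table)
        if catalog.compare_and_swap(table, base, make_new_pointer(base)):
            return attempt
    raise RuntimeError("too many conflicting commits")


catalog = Catalog()
# Table versions are modeled as plain integers for simplicity.
attempts = commit_with_retry(catalog, "events", lambda base: (base or 0) + 1)
print(attempts, catalog.get("events"))
```

A stale writer that still believes the pointer has its old value gets `False` back from `compare_and_swap` and has to rebase its change on the latest version, which is the "failed or retried changes" scenario mentioned above.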

Other examples where a catalog service is beneficial include access control and security. This problem is further complicated in environments with multiple engines reading and writing data, requiring interoperability.

As a result, several current efforts have emerged to address these challenges. First, we have traditional catalogs like Hive Metastore and AWS Glue. Newcomers include Snowflake with Polaris Catalog and Databricks with Unity Catalog.

It’s exciting to see how this area will evolve!
